How can we instead empower youth to take charge of their own mental health?

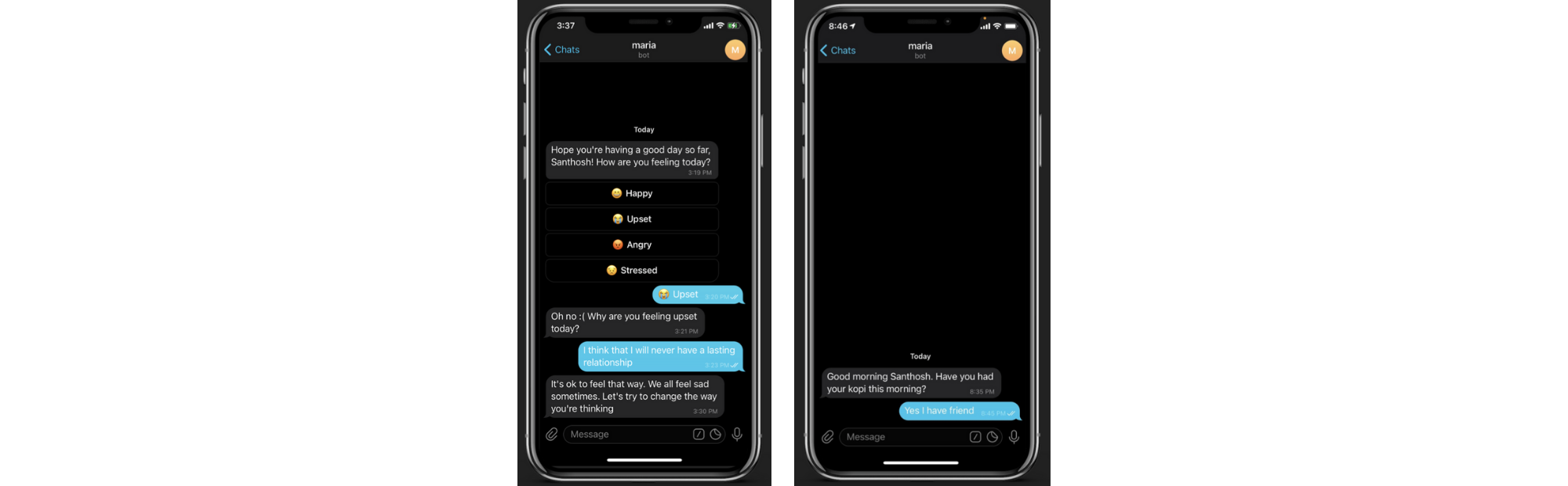

Currently, mental health support tends to focus on counselling. However, 91% of youth surveyed by Leaping Frog prefer anonymous texting over face-to-face and phone conversations when seeking help. How can we instead

Leaping Frog hopes to develop Blurple, a peer-to-peer cognitive reappraisal platform which aims to help users feel better by reframing their negative thoughts into positive ones. Users can share their negativ

Contact Details